I haven’t posted in a while, because I honestly didn’t run into any problem worth posting about. But backing up my wife’s iPhone turned out to be an odyssey I’d rather forget. All I wanted was to copy all the pictures that are physically on her phone to my server and some backup hard drives. My first attempt was on her MacBook, and I quickly gave up, because I simply don’t speak Mac. Accepting defeat and moving on, I grabbed my old Windows laptop, and all seemed fine. I connected the iPhone, it showed up in Explorer, I marked all the files to copy, CTRL-C, CTRL-V, and… it stopped with the rather famous “Device is Unreachable” error. I tried the usual remedy of changing the photo setting “Transfer to Mac or PC” from “Automatic” to “Keep Originals”:

Category: Linux

Teams on Windows grey Overlay Foo

After being forced to work with Windows for a while, I was finally able to switch back to Linux. One of the first things I needed for work was MS Teams. So I updated the already installed version, and the first time I had to share my screen I had a flashback to why I had switched to running Teams in a VM rather than using the native Linux version: a grey overlay.

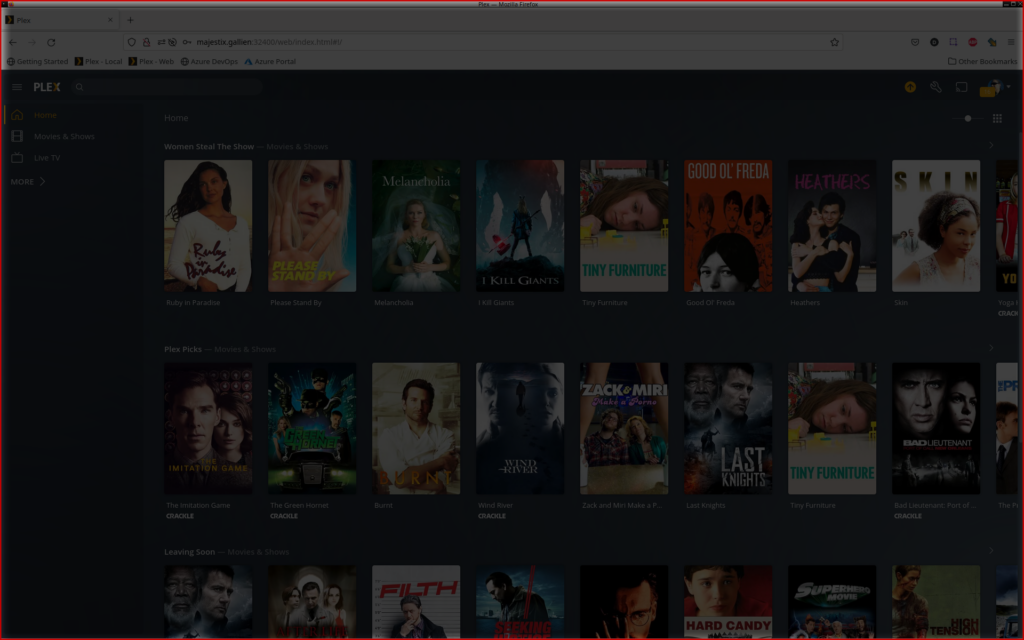

It’s nice to have the red frame, so that I know which screen I am sharing, but the grey overlay makes things very hard to read, especially when you share a dark-themed window. But see for yourself. This is what I got:

OpenVPN in Ubuntu 18.04 Foo within OpenVZ Container (Strato)

It has been a while since my last blog post, simply because no real foo happened to me in the meantime. This issue, however, really gave me some heartburn.

Let’s start with the general setup. We have a Linux VM in an OpenVZ environment. The hosting provider is Strato in Germany, but I don’t think that really matters. There are some known issues with the TUN device not being accessible within the VM, but Strato did their homework and I could see the module:

# lsmod | grep tun
tun 4242 -2
vznetstat 4242 -2 tun,ip_vznetstat,ip6_vznetstat

OpenVPN 2.4 CRL Expired Foo

I chose a nice Friday evening and a good Scotch to upgrade an older Ubuntu LTS to the latest and greatest. All went well until I wanted to connect one of the clients via VPN. All I saw was this nasty little line in the server’s log file:

... VERIFY ERROR: depth=0, error=CRL has expired: ...
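This error means that the `nextUpdate` date stored in the CRL lies in the past. A minimal sketch for checking that from the shell, assuming the OpenSSL command-line tool and GNU `date`; `crl_expired` and `date_passed` are hypothetical helper names, and the CRL path in the example comment is a placeholder:

```shell
#!/usr/bin/env bash
# Sketch: check whether a PEM-encoded CRL has passed its nextUpdate date.
# Assumes OpenSSL and GNU date; helper names and paths are hypothetical.

# Returns 0 if the given date string lies in the past (or is now),
# 1 if it lies in the future, 2 if date cannot parse it.
date_passed() {
  local when_epoch
  when_epoch=$(date -d "$1" +%s 2>/dev/null) || return 2
  [ "$when_epoch" -le "$(date +%s)" ]
}

# Returns 0 if the CRL in PEM file $1 has expired, 1 if it is still valid,
# 2 if the file cannot be read or parsed.
crl_expired() {
  local next
  # openssl prints one line such as "nextUpdate=Jan  1 00:00:00 2030 GMT".
  next=$(openssl crl -in "$1" -noout -nextupdate 2>/dev/null) || return 2
  date_passed "${next#nextUpdate=}"
}

# Example (placeholder path):
#   crl_expired /etc/openvpn/crl.pem && echo "CRL has expired"
```

If it has expired, one common way out (an assumption about your PKI, not necessarily what the full post does) is to regenerate the CRL, for example with Easy-RSA 3’s `./easyrsa gen-crl`, and install the new file at the path given by the server’s `crl-verify` directive.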

Send Email from the Command Line using an External SMTP Server

Sending email in Linux is pretty straightforward once an email server is set up. Just use mutt or mail and all is good. But sometimes you actually want to test whether SMTP is working correctly, and not only on your own box but on a remote one. That is of course easy with a MUA like Thunderbird or Sylpheed, but that is not always feasible on a remote server in a remote network.
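One way to run such a test without a mail client is curl, which can speak SMTP itself. This is a sketch, not necessarily the tool the full post uses: the server URL, account and addresses are placeholders, `make_message` and `send_test_mail` are hypothetical helper names, and it assumes a curl build with SMTP and TLS support plus GNU `date`.

```shell
#!/usr/bin/env bash
# Sketch: send a test mail through a remote SMTP server with curl.
# All names, hosts and addresses are placeholders.

# Build a minimal RFC 5322 message with CRLF line endings on stdout.
make_message() {
  local from=$1 to=$2 subject=$3 body=$4
  printf 'From: %s\r\nTo: %s\r\nSubject: %s\r\nDate: %s\r\n\r\n%s\r\n' \
    "$from" "$to" "$subject" "$(date -R)" "$body"
}

# Submit the message. --ssl-reqd makes curl insist on TLS (STARTTLS for
# smtp://, implicit TLS for smtps://). With only a user name after --user,
# curl prompts for the password. --verbose shows the SMTP dialogue.
send_test_mail() {
  local url=$1 user=$2 from=$3 to=$4 msg rc
  msg=$(mktemp) || return 1
  make_message "$from" "$to" "SMTP test" "Sent from $(hostname)" >"$msg"
  curl --verbose --ssl-reqd --url "$url" --user "$user" \
       --mail-from "$from" --mail-rcpt "$to" --upload-file "$msg"
  rc=$?
  rm -f "$msg"
  return "$rc"
}

# Example (hypothetical server and account):
#   send_test_mail smtp://mail.example.com:587 alice alice@example.com bob@example.org
```

The verbose output (EHLO, STARTTLS, AUTH, MAIL FROM, RCPT TO and the server’s replies) is usually the interesting part when a remote server misbehaves.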


MySQL max_connections limited to 214 on Ubuntu Foo

After moving a server to a new machine running Ubuntu 16.10, I received some strange Postfix SMTP errors, which turned out to be a connection issue with the MySQL server:

postfix/cleanup[30475]: warning: connect to mysql server 127.0.0.1: Too many connections

Oops, did I forget to raise max_connections during the migration?
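Where the odd number 214 comes from: as commonly explained for MySQL 5.6/5.7, when mysqld gets fewer file descriptors than it asks for, it caps `max_connections` at the open-files limit minus 10 minus twice `TABLE_OPEN_CACHE_MIN` (400). A small sketch of that arithmetic, assuming those constants; `effective_max_connections` is a hypothetical name, not a MySQL function:

```shell
#!/usr/bin/env bash
# Sketch of the cap described above (assumed MySQL 5.6/5.7 constants):
#   cap = open_files_limit - 10 - 2 * TABLE_OPEN_CACHE_MIN, with TABLE_OPEN_CACHE_MIN = 400
# Prints the max_connections value mysqld would end up with.
effective_max_connections() {
  local open_files_limit=$1 requested=$2
  local cap=$(( open_files_limit - 10 - 2 * 400 ))
  if [ "$cap" -lt "$requested" ]; then
    echo "$cap"
  else
    echo "$requested"
  fi
}

# With the common default soft limit of 1024 open files:
effective_max_connections 1024 500   # prints 214
```

So with 1024 descriptors the result is 214 however high max_connections is set in my.cnf. To see the limit mysqld actually runs with, `grep 'open files' /proc/$(pidof mysqld)/limits` is a quick check; on systemd-based releases the limit usually comes from the unit, and a `LimitNOFILE=` line in an override created with `systemctl edit mysql` is the usual way to raise it (again an assumption about your setup).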


Check SSL Connection Foo

It was that time of the year when I had to renew some SSL certificates. Renewing and updating them on the server is a nice and easy process. But checking whether the server is delivering the correct certificate, and whether I updated and populated the intermediate certificates correctly, is a different story.
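For the checking part, `openssl s_client` shows what the server really sends. A minimal sketch, assuming the OpenSSL command-line tool; `fetch_chain`, `count_certs` and `leaf_summary` are hypothetical helper names and the host name is a placeholder:

```shell
#!/usr/bin/env bash
# Sketch: inspect the certificate chain a server delivers.
# Assumes the OpenSSL CLI; helper names and host are hypothetical.

# Dump the chain the server sends. -servername sets SNI so name-based
# virtual hosts return the right certificate; -showcerts prints every
# certificate the server sends (leaf plus intermediates), not only the leaf.
fetch_chain() {
  local host=$1 port=${2:-443}
  openssl s_client -connect "$host:$port" -servername "$host" -showcerts \
    </dev/null 2>/dev/null
}

# Count the PEM certificates in s_client output read from stdin.
count_certs() {
  grep -c -- '-----BEGIN CERTIFICATE-----'
}

# Print subject, issuer and expiry date of the first (leaf) certificate on stdin.
leaf_summary() {
  openssl x509 -noout -subject -issuer -enddate
}

# Example (hypothetical host):
#   fetch_chain www.example.com | count_certs
#   fetch_chain www.example.com | leaf_summary
```

If `count_certs` reports only one certificate, the server is most likely sending the leaf without its intermediate(s); `leaf_summary` shows whether the renewed certificate, with its new end date, is the one being served.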

Nagios Enabling External Command on Debian based Distributions

While debugging my check_disk problem after the 15.10 upgrade, I saw that I had forgotten to enable external commands. They are handy when you want to reschedule a check to see whether your changes took effect. Again, this is easily activated. So if you see something like this, you might want to make some changes:

Error: Could not stat() command file ‘/var/lib/nagios3/rw/nagios.cmd’!

The external command file may be missing, Nagios may not be running, and/or Nagios may not be checking external commands. An error occurred while attempting to commit your command for processing.
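On Debian and Ubuntu the change usually has two parts: switching `check_external_commands` on in `nagios.cfg`, and giving the web server group access to the directory holding the command pipe. A sketch assuming the `nagios3` package’s default paths; the helper names are hypothetical, and the permission commands are the ones commonly cited for that package, not necessarily what the full post uses:

```shell
#!/usr/bin/env bash
# Sketch for the Debian/Ubuntu nagios3 package; default paths assumed
# (/etc/nagios3/nagios.cfg, /var/lib/nagios3). Helper names are hypothetical.

# Flip check_external_commands from 0 to 1 in the given nagios.cfg.
enable_external_commands() {
  local cfg=${1:-/etc/nagios3/nagios.cfg}
  sed -i 's/^check_external_commands=0/check_external_commands=1/' "$cfg"
}

# Let the www-data group (the CGIs) reach the command pipe in rw/.
# Run as root with Nagios stopped; --add refuses if an override already exists.
fix_command_dir_permissions() {
  dpkg-statoverride --update --add nagios www-data 2710 /var/lib/nagios3/rw
  dpkg-statoverride --update --add nagios nagios 751 /var/lib/nagios3
}
```

Afterwards Nagios needs a restart (for example `service nagios3 restart`) so that it re-reads its configuration and creates `nagios.cmd`.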

Nagios check_disk Foo on Ubuntu 15.10

Another day, another foo, this time involving the check_disk plugin for Nagios on Ubuntu. After updating to 15.10, my disk space check suddenly failed with this one:

DISK CRITICAL - /sys/kernel/debug/tracing is not accessible: Permission denied

Automatic Ubuntu Kernel Clean Up Foo (Update)

Cleaning up old kernel images on an Ubuntu machine is quite an annoying task. If you forget it and you have a separate /boot partition, you will sooner or later run out of disk space. And then, of course, all your updates will fail.

Doing the cleanup manually is, as mentioned, more than annoying; it is also very tedious. But other smart people have spent some time and created a nice little one-liner that gets rid of old kernel versions. It makes sure that the currently running kernel is not removed, and only that one: every other versioned kernel package is purged. So it is very important to reboot after a kernel upgrade before you run it, otherwise the freshly installed kernel is the one that gets removed!

And without further ado, I present…

dpkg -l 'linux-*' | sed '/^ii/!d;/'"$(uname -r | sed "s/\(.*\)-\([^0-9]\+\)/\1/")"'/d;s/^[^ ]* [^ ]* \([^ ]*\).*/\1/;/[0-9]/!d' | xargs apt-get -y purge

Update:

Not a big deal, but a sudo had snuck into the xargs call. It has been removed and shouldn’t cause any trouble anymore.
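Since the one-liner purges without asking, it can be worth looking at its list first. Below is a dry-run variant that applies the same sed expressions but stops before `xargs apt-get -y purge`, so nothing is removed. `old_kernel_packages` is a hypothetical name; it reads `dpkg -l 'linux-*'` output on standard input so it can also be tried on sample text.

```shell
#!/usr/bin/env bash
# Dry-run sketch of the one-liner: prints the packages it would purge.
# Reads `dpkg -l 'linux-*'` output on stdin; $1 is the kernel release to keep
# (defaults to the running one, as in the original).
old_kernel_packages() {
  local release=${1:-$(uname -r)} version
  # Strip the flavour, e.g. 4.2.0-16-generic -> 4.2.0-16 (same sed as the one-liner).
  version=$(printf '%s\n' "$release" | sed 's/\(.*\)-\([^0-9]\+\)/\1/')
  # Keep installed (ii) lines, drop lines mentioning the kept version,
  # cut out the package name, drop names without digits (meta packages).
  sed '/^ii/!d;/'"$version"'/d;s/^[^ ]* [^ ]* \([^ ]*\).*/\1/;/[0-9]/!d'
}

# Usage on a real system (lists only, removes nothing):
#   dpkg -l 'linux-*' | old_kernel_packages
```

If the list contains a kernel you still want, reboot into it before running the real one-liner.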